WARNING: IF YOU HAVEN’T PLAYED SPEARMINT 1 YET, GO DOWNLOAD IT AND TRY IT OUT. IT’S A QUICK GAME; I’LL WAIT HERE FOR YOU.

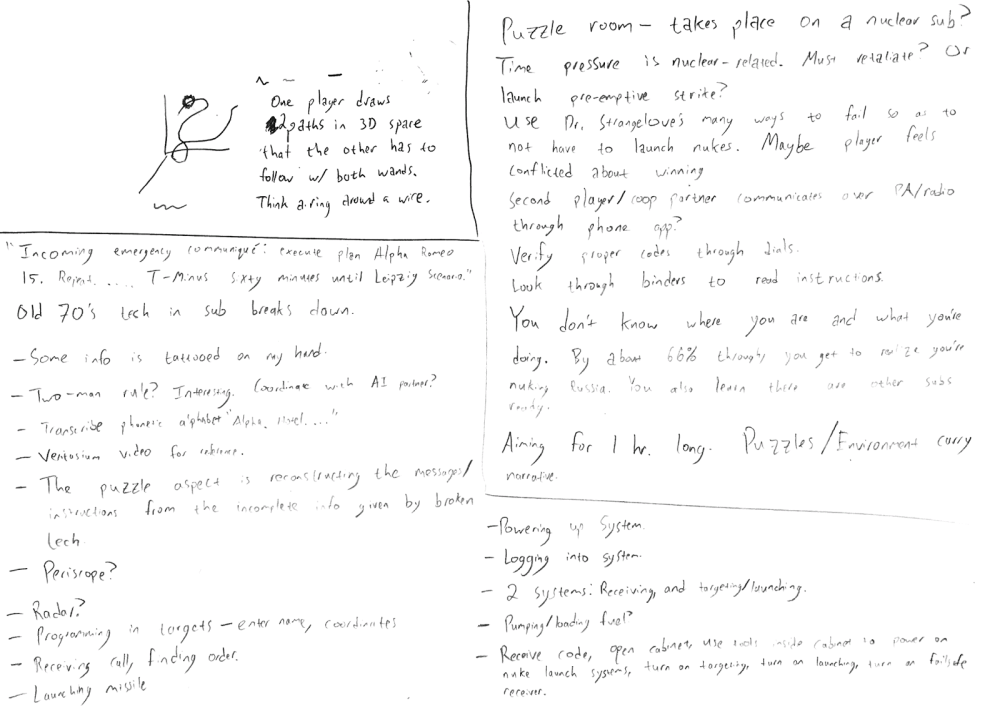

Spearmint 1 started as an experiment in making and releasing a game in 30 days. Instead, it took about 70 days. I’ll be posting later about the process of making the game and what I learned from it. This essay instead focuses on what the game taught me about VR game design.

The motivating question for S1 was: “Do small, intricate game-spaces work better for VR than open-area traversal?” Before VR, almost all 3D games challenged the player to traverse a large space, whether or not they were billed as “open-world” games.

Developers in VR have sometimes taken the need for large spaces for granted, which has led many of them to try to “solve the locomotion problem” without creating nausea (1, 2, 3). The state-of-the-art solution is teleportation. However, I’ve found that when playing a game that relies on teleportation, I stop walking around my play space and lose the physical connection to the game that makes VR so special. In short, the headset just becomes a screen.

This led me to ask: what if I don’t use any teleportation and instead focus on a dense game world that fits inside a living room? The result is the escape-room game you see in Spearmint 1. The game’s theming, puzzle design, and environment detailing were built in service of this main investigation.

The rest of this essay focuses on some interesting successes and failures gleaned in building this game. Here are some questions to ask yourself before continuing on:

- Do you still remember the layout of the space? Is this memory more or less detailed than other VR games you’ve played?

- Did you feel claustrophobic or constrained? Did you find yourself wondering what was outside the walls?

- Are there other game types that lend themselves to small spaces? Can they be adapted into VR well?

Interesting Success #1: Sense of space

In many ways, the original question about small, intricate game-spaces was answered to my satisfaction. The small room that I created seems to become a real space for players. They know their way around it and explore the entire playspace. I found some good signs of this:

- Many playtesters tried to lean on the table while thinking through a puzzle. It wasn’t ethereal to them; it was a real object.

- People smiled during the wire puzzle when they had to stretch their arms across the room. The physicality was novel and enjoyable.

- A lot of players had fun just throwing the papers around the room and making a general mess.

Even though the room had to be small enough to fit within the average person’s living room, I didn’t run out of space to place things. Adding more detail and systems to the room could easily make for an experience 10x as long. An intricate puzzlebox of a room could be a real joy to play in. However, the intricacy of the space is directly tied to the time the developer invests and how many assets they’re willing to create.

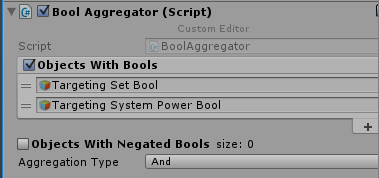

Interesting Success #2: Watchable booleans

This lesson is more about Unity development than VR in particular. “Watchable booleans” were an ergonomics solution that ended up really punching above their weight. Here’s the challenge: the game uses a bunch of small LEDs to indicate the state of systems in the room to the player. They’re all the same prefab; they just need to be hooked up to different things in the room.

UnityEvents seem like a good fit here, letting you tie the behavior of objects together from the editor very quickly. They work well when the same condition on different objects should trigger different actions. For example, the condition of “pressing a button” can easily be tied to different actions. The MonoBehaviour encapsulates the condition and you specify its action in the editor.

I created a somewhat analogous tool to UnityEvents: Watchable Booleans. They’re the inverse of the above picture: the action is encapsulated in the behavior, and you specify its condition in the editor. An LED can really only turn on or off; you then say in the editor which boolean value in the scene you want to tie it to. This let me set up the conditions for each light straight from the editor.

More details

Lights look for an object that implements IWatchableBool. Objects that implement IWatchableBool report one boolean value, which was generally some version of “am I activated?”

- Batteries report whether they’re charged.

- Safety switches report if they’ve been flipped.

- Dials report whether they’ve been set to the right value.

- Some Unity objects served simply as holders for invisible state. For example, turning the ignition while the battery is charged turns on the “Targeting System Power” object.

- Aggregators could perform the “AND” or “OR” operation on these parts of the system, and lights could watch these.

This is a slightly non-standard use of the Unity inspector: the inspector doesn’t let you show interface-typed fields, and the resulting pathways of conditions through aggregators can be hard to trace. However, this approach significantly improved my iteration time and made bugs much easier to track down.
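To make the pattern concrete, here is a minimal, engine-agnostic sketch in Python rather than the Unity C# the game actually uses. Everything except the idea of IWatchableBool is hypothetical: the class names (`Battery`, `SafetySwitch`, `AllOf`, `AnyOf`, `Led`) are made up for illustration, and it assumes the light polls its source each update, which may not be how the real game does it.

```python
from typing import Protocol, Sequence


class WatchableBool(Protocol):
    """Anything that reports one boolean (stand-in for IWatchableBool)."""

    @property
    def value(self) -> bool: ...


class Battery:
    """Reports whether it is charged."""

    def __init__(self) -> None:
        self.charged = False

    @property
    def value(self) -> bool:
        return self.charged


class SafetySwitch:
    """Reports whether it has been flipped."""

    def __init__(self) -> None:
        self.flipped = False

    @property
    def value(self) -> bool:
        return self.flipped


class AllOf:
    """Aggregator: true only when every watched source is true (AND)."""

    def __init__(self, sources: Sequence[WatchableBool]) -> None:
        self.sources = list(sources)

    @property
    def value(self) -> bool:
        return all(s.value for s in self.sources)


class AnyOf:
    """Aggregator: true when at least one watched source is true (OR)."""

    def __init__(self, sources: Sequence[WatchableBool]) -> None:
        self.sources = list(sources)

    @property
    def value(self) -> bool:
        return any(s.value for s in self.sources)


class Led:
    """The action (lit or not) lives here; the condition is plugged in."""

    def __init__(self, source: WatchableBool) -> None:
        self.source = source
        self.is_lit = False

    def update(self) -> None:
        # Assumption: poll the watched value once per update tick.
        self.is_lit = self.source.value


# Wiring that would be done in the editor in the real game:
battery = Battery()
switch = SafetySwitch()
ready_led = Led(AllOf([battery, switch]))

battery.charged = True
ready_led.update()  # stays off: the switch has not been flipped
switch.flipped = True
ready_led.update()  # lights up: both conditions now hold
```

Because aggregators are themselves watchable, they can be nested, which is also why the chain of conditions behind a single light can get hard to trace.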

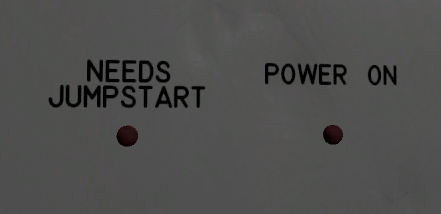

Interesting Failure #1: Tone and narrative

A big part of the original design is completely absent from the final game: the dark comedy tone. This tone fell naturally out of the puzzle design. And, by the same token, when those puzzles had to change, the tone left with them as well. This left a gaping hole in the feeling of the game.

Where did this dark comedy tone come from? The puzzles were going to be built off of the technology on the nuclear submarine being old, rundown, and broken. Some examples:

- The radio crackles while transmitting vital information, creating a puzzle for the player of eliminating impossible combinations.

- The safe needs to be broken into to retrieve the data.

- The targeting and launch systems are so old that they need to be jumpstarted with a wire.

This created potential for a bit of a joke: when old technology breaks, people find workarounds. What’s the one system you don’t want people finding workarounds for?

Nuclear failsafes.

Early challenges would lead the player to a failsafe system that appears completely broken. The player, having been trained to subvert the systems on the sub, would then willingly bypass this failsafe in the pursuit of beating the game.

However, this fell apart as the game developed. I had to cut some of those early training challenges (they were bug-prone and confusing). The assets weren’t good enough to communicate that the sub was obsolete and rundown. Thus, when players came up to this broken failsafe, they hadn’t been trained to think laterally in previous puzzles.

All of these little cuts led to a bland tone in the game. Is it serious? Is it a comedy? It ends up feeling like a standard puzzle game, but in VR.

Interesting Failure #2: Physics and VR

A lot of VR games rely on physics for their gameplay. It makes sense at first blush; physics are familiar to players when they put on the headset, and most modern engines make it pretty easy to get started.

However, let’s be clear about something: developing with game physics sucks.

This glut of physics-based VR games has really brought the issues with game physics right back into view. Before VR, most 3D games didn’t rely on physics for much of their gameplay. Many of the issues with the physics were easy to ignore because they weren’t that important to the game; they were just eye candy.

So, what are the problems with building gameplay off of game physics?

Physics are hard to direct

Going from the mental picture of the interaction you want to a robust, physics-based interaction can be a nightmare. Take the ignition interaction in Spearmint 1, for example.

It took a day of prototyping, building, and debugging to get this one interaction to work, and it’s still a bit unreliable. It looks nice and smooth, but behind the scenes we’re creating, configuring, and destroying multiple joints in those few seconds. Those joints are built off of the behemoth that is ConfigurableJoint. Anyone who says they understand all of its parameters and their interactions is lying to you.

They’re prone to weird bugs that are essentially undebuggable

PhysX is remarkably robust and accurate; it truly is shocking just how much of the time it looks right.

Despite this, any physics-based game is going to encounter its fair share of weird bugs.

- Maybe two objects pass right through each other because they’re going too fast.

- Perhaps thin objects don’t lie flush with other objects and start jiggling.

- Sometimes your fixed joints start to drift apart from each other because you’ve chained too many together.

If you’re lucky, the bug will happen at a time that doesn’t affect gameplay. However, there’s always the danger that your critical-path item will be sent flying by a weird interaction. These problems are significantly worse because the physics system is largely opaque to debugging without building something like this. The physics engine is a complex system that spins off unpredictable issues. That’s a tough foundation to build a game on.
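The first bug in the list above (fast objects passing through each other) falls out of simple arithmetic. Here is a rough sketch, not how PhysX actually works internally: it assumes a point object, a thin wall, and overlap checks made only at discrete step positions. The 0.02 s step matches Unity’s default fixed timestep; the function name and all other numbers are made up for illustration.

```python
FIXED_DT = 0.02  # seconds per physics step (Unity's default fixed timestep)


def tunnels_through(speed: float, wall_start: float, wall_thickness: float,
                    dt: float = FIXED_DT, max_steps: int = 1000) -> bool:
    """Return True if a point moving at `speed` (m/s) ends up past the wall
    without ever being sampled inside it."""
    wall_end = wall_start + wall_thickness
    x = 0.0
    for _ in range(max_steps):
        x += speed * dt  # the object jumps a whole step at a time
        if wall_start <= x <= wall_end:
            return False  # sampled inside the wall: collision detected
        if x > wall_end:
            return True  # skipped clean over the wall between two steps
    return False


# A 5 cm wall placed 1.1 m away.
slow = tunnels_through(speed=1.0, wall_start=1.1, wall_thickness=0.05)
fast = tunnels_through(speed=10.0, wall_start=1.1, wall_thickness=0.05)
```

At 1 m/s the point moves 2 cm per step, so at least one sample lands inside the 5 cm wall. At 10 m/s it moves 20 cm per step and jumps from about 1.0 m to about 1.2 m, never touching the wall. Engines offer continuous collision detection modes to reduce this, but that is one more setting to tune per object.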

Games require all sorts of weird, fundamentally wrong interactions

VR systems can’t yet impart forces back on the player. This is obvious, but causes problems when interacting with a physical simulation. This limitation leads to lots of little questions whose answers interact in messy, unphysical ways:

- Should I be able to put my hands through objects? If not, what happens when my in-game hands stop tracking my real-life hands?

- What happens if I grab an object with both hands and pull them apart?

- What happens if I grab an object that’s bolted down and pull on it? Should my hand separate from the object?

- How do I represent heaviness if all objects can be moved in the same way by my virtual hands?

- What happens when objects accidentally clip out of bounds? Should they respawn?

- How do I represent fine motor control without finger tracking?

- How do I pick up objects? Which button is it? Should it “snap” to my hand?

Any answer to the above questions is a non-physical workaround, and any combination of these answers is gonna have some weird, unintended consequence that feels wrong. There isn’t yet a set of conventional answers to the above questions, either, which means every game has to overtutorialize some of its interactions.

In Spearmint 1, I ran up against these issues all the time. Making small fixes for every interaction was a frustrating time-sink. Some examples I ran into:

- When pushing the wire against the battery, the ends of the wire would jump around and vibrate a lot because they had become separated from the physical hand holding them.

- Picking up the desired sheet from a stack of paper was a challenge for players, and picking up the wrong one sent the rest of the papers flying.

- Once the keys had been inserted into the ignition, they would start to turn automatically as if the player were starting the machine. This was because of an obscure orientation error in setting up the joints.

- At one point, the handles on the safe wheel would come off as you tried to turn the wheel. This was caused by setting up multiple chained fixed joints (the handles were fixed to the wheel, the wheel was fixed to the door, the door was fixed to the body). The solution was to lower the mass of the handles, which makes no physical sense but solved the issue.

For my next project, I’m leaning towards something less physical and more ethereal. If force/interaction is only one-way in the current VR systems, then perhaps games should reflect those limitations.

Interesting Failure #3: Text sucks in first-gen VR

This is a quick one. First-gen VR headsets aren’t hi-res enough to build games around text. Text easily blurs if the headset doesn’t sit correctly on the player’s head, and even with a good fit it has to be unreasonably large (think 48pt documents) to be readable. This doesn’t mean your game should have no text; it just means that you may not want to make it important to the gameplay.

Interesting Failure #4: Took too long to get playable

I should have known better, but I didn’t have a fully playable version of the game until 2 weeks before release. Getting playable early gives me more time to understand what parts of the game work and what parts need to be cut. It also motivates me more if I’m able to actually show people what I’m working on. So what went wrong?

The escape game genre doesn’t get to a playable state very early. The puzzles and interactions in escape games often rely on the actual asset being present. If your key doesn’t look like a key, people aren’t going to know what to do with it. A better approach is still a bit of a mystery for me — what does an early, prototype version of this escape game really look like? What was the real bare minimum I needed?

Parting Words

While I’m not head over heels about the final project, I’m thrilled to have something finished and uploaded. In the name of complete transparency, I’m also uploading all of my design documents and sketches for the game. If you’ve ever wanted to look into the head of another developer, here’s your chance. I’m also going to work on getting Spearmint 1 open sourced — there’s no reason for me to hide the code. I just have to work through the licensing issues around the assets.

After that, Spearmint 2 starts development soon. Hopefully I’ll scope a little better and pop it out in something like 30 days. Seeya then!